Recurrent Neural Networks (RNNs) for Sequence Classification

Sequence Modelling

Table of Contents:

- Single Layer Architecture

- Stacked Layer Architecture

Single Layer Architecture

RNN Architecture

Assume you have a dataset X with M datapoints, each with N features. The RNN architecture would then look something like this:

Note that in the above architecture the weights W, U and V are the same across all timestamps. In an FFNN the same weights are applied to all the input data, so when you unfold the FFNN to get an RNN the weights remain the same. Researchers call this weight sharing.

Hence a single-layer RNN is nothing but multiple single-layer FFNNs connected in sequence through their hidden states.
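To make the unfolded view concrete, here is a minimal pure-Python sketch of the forward pass. It assumes a common parameterization that the text does not spell out: U maps the input to the hidden state, W maps the previous hidden state to the next one, V maps the final hidden state to class scores, and b and B are the hidden and output biases. The function names, the toy sizes, and the tanh/softmax choices are likewise illustrative assumptions.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def rnn_step(x_t, h_prev, U, W, b):
    """One 'single-layer FFNN' step: h_t = tanh(U x_t + W h_prev + b)."""
    ux = matvec(U, x_t)
    wh = matvec(W, h_prev)
    return [math.tanh(a + c + d) for a, c, d in zip(ux, wh, b)]

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_classify(xs, U, W, V, b, B):
    """Apply the same U, W, b at every timestamp, then classify from the last state."""
    h = [0.0] * len(b)  # initial hidden state h_0
    for x_t in xs:      # weight sharing: nothing in this loop depends on t
        h = rnn_step(x_t, h, U, W, b)
    scores = [s + c for s, c in zip(matvec(V, h), B)]
    return softmax(scores)

# Toy sizes (assumed): 2 input features, 3 hidden units, 2 classes.
U = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
W = [[0.0, 0.1, -0.1], [0.2, 0.0, 0.3], [-0.3, 0.1, 0.0]]
V = [[0.5, -0.4, 0.1], [-0.2, 0.3, 0.6]]
b = [0.0, 0.0, 0.0]
B = [0.0, 0.0]

probs = rnn_classify([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], U, W, V, b, B)
print(probs)  # two class probabilities that sum to 1
```

Each loop iteration is one copy of the same small network. The only thing that changes from step to step is the hidden state passed along.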

In many papers you will find researchers using the following shorthand notation for RNNs (generalized to timestamp t):
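One common way to write this recurrence is sketched below. The roles given to U, W, V, b and B, and the choice of tanh and softmax, are assumptions here. Other texts swap the roles of W and U or use a different activation. T denotes the last timestamp of the sequence.

```latex
h_t = \tanh\left(U x_t + W h_{t-1} + b\right),
\qquad
\hat{y} = \mathrm{softmax}\left(V h_T + B\right)
```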

The following video shows an animation of how an RNN performs its computation internally.

Learning in RNN

Since we are using an RNN to solve a classification task, we can use the cross-entropy loss to train the network, as shown below:
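As a small illustration of the cross-entropy loss, the sketch below computes it for predicted class probabilities. The function names are hypothetical, and it assumes the network output has already been passed through a softmax and that targets are given as class indices.

```python
import math

def cross_entropy(probs, target):
    """Cross-entropy for one example: E = -log(probs[target])."""
    eps = 1e-12  # guards against log(0)
    return -math.log(max(probs[target], eps))

def mean_cross_entropy(batch_probs, targets):
    """Average the per-example losses over a batch."""
    losses = [cross_entropy(p, t) for p, t in zip(batch_probs, targets)]
    return sum(losses) / len(losses)

confident = cross_entropy([0.9, 0.1], 0)  # correct class gets high probability
wrong = cross_entropy([0.1, 0.9], 0)      # correct class gets low probability
print(confident, wrong)
```

The loss is small when the correct class receives high probability and grows quickly as that probability approaches zero.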

This optimization problem can be solved using any optimizer, e.g. gradient descent / Adam / AdaGrad / … (stochastic or mini-batch version). Below, let's try solving it using gradient descent.
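The gradient descent update itself is simple. The sketch below applies it to a toy one-parameter objective chosen purely for illustration. In the RNN the same rule would be applied element-wise to each of W, U, V, b and B. The function name and learning rate are assumptions.

```python
def gradient_descent_step(params, grads, learning_rate=0.1):
    """Return new parameters: theta <- theta - learning_rate * dE/dtheta."""
    return {name: value - learning_rate * grads[name]
            for name, value in params.items()}

# Toy objective (assumed for illustration): E(w) = (w - 3)^2, so dE/dw = 2 (w - 3).
params = {"w": 0.0}
for _ in range(100):
    grads = {"w": 2.0 * (params["w"] - 3.0)}
    params = gradient_descent_step(params, grads)
print(params["w"])  # approaches the minimizer w = 3
```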

To calculate these derivatives we will use the computation graph for this RNN architecture, which is shown below:
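Walking the computation graph in reverse is what is usually called backpropagation through time. The sketch below does this for a deliberately tiny case and checks the result against finite differences. It is a sketch under stated assumptions, not the exact network from the figures: every parameter is a scalar, the recurrence is h_t = tanh(U x_t + W h_{t-1} + b), and the output is a two-class problem written with a sigmoid on V h_T + B.

```python
import math

def forward(xs, p):
    """Scalar RNN: h_t = tanh(U x_t + W h_{t-1} + b), prob = sigmoid(V h_T + B)."""
    hs = [0.0]  # hs[0] is the initial hidden state
    for x in xs:
        hs.append(math.tanh(p["U"] * x + p["W"] * hs[-1] + p["b"]))
    prob = 1.0 / (1.0 + math.exp(-(p["V"] * hs[-1] + p["B"])))
    return hs, prob

def loss(xs, y, p):
    """Binary cross-entropy on the final prediction."""
    _, prob = forward(xs, p)
    return -(y * math.log(prob) + (1 - y) * math.log(1 - prob))

def backward(xs, y, p):
    """Traverse the computation graph from the loss back to the first timestamp."""
    hs, prob = forward(xs, p)
    d_out = prob - y  # dE/d(V h_T + B) for sigmoid + cross-entropy
    grads = {"V": d_out * hs[-1], "B": d_out, "W": 0.0, "U": 0.0, "b": 0.0}
    d_h = d_out * p["V"]  # dE/dh_T
    for t in range(len(xs), 0, -1):
        d_a = d_h * (1.0 - hs[t] ** 2)  # back through tanh
        grads["W"] += d_a * hs[t - 1]   # shared weights: contributions add up
        grads["U"] += d_a * xs[t - 1]
        grads["b"] += d_a
        d_h = d_a * p["W"]              # pass the gradient on to h_{t-1}
    return grads

def numeric_grad(xs, y, p, name, eps=1e-6):
    """Central finite difference, used only to check the analytic gradient."""
    up = dict(p)
    up[name] += eps
    down = dict(p)
    down[name] -= eps
    return (loss(xs, y, up) - loss(xs, y, down)) / (2 * eps)

params = {"U": 0.5, "W": -0.8, "V": 1.2, "b": 0.1, "B": -0.3}
xs, y = [1.0, -0.5, 0.25, 2.0], 1
analytic = backward(xs, y, params)
max_gap = max(abs(analytic[k] - numeric_grad(xs, y, params, k)) for k in params)
print(analytic, max_gap)
```

Note how the gradients for W, U and b are sums over timestamps (a direct consequence of weight sharing), while V and B only receive a contribution from the output.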

Calculating dE / dB

Calculating dE / dV

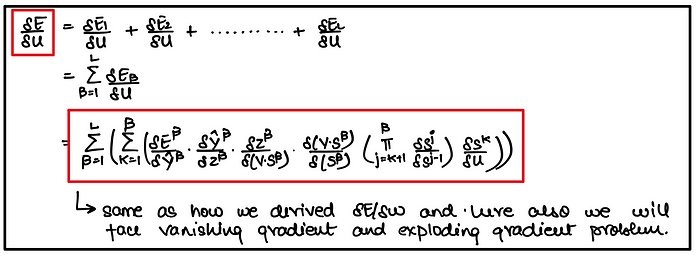

Calculating dE / dW
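As a schematic reference, and assuming the recurrence h_t = tanh(U x_t + W h_{t-1} + b) with the loss computed only at the last timestamp T, the gradient with respect to the recurrent weights can be written as a sum over timestamps. Here the symbol ∂⁺ denotes the immediate partial derivative that treats h_{k-1} as a constant. Both this notation and the role assigned to W are assumptions.

```latex
\frac{\partial E}{\partial W}
  = \sum_{k=1}^{T} \frac{\partial E}{\partial h_T}
    \left( \prod_{j=k+1}^{T} \frac{\partial h_j}{\partial h_{j-1}} \right)
    \frac{\partial^{+} h_k}{\partial W},
\qquad
\frac{\partial h_j}{\partial h_{j-1}} = \mathrm{diag}\left(1 - h_j^{2}\right) W
```

The product in the middle has one factor per timestamp between k and T, which is why long sequences make this gradient numerically delicate.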

Calculating dE / dU

Calculating dE / db

How do RNNs solve the drawbacks of FFNNs?

We saw here a few issues with FFNNs that led researchers to invent RNNs. But do RNNs solve these problems? Yes, they solve both FFNN problems, as highlighted below:

- In an RNN we consider the entire sequential information when making a prediction, because each hidden state carries information about all the previous inputs (i.e. X1, X2, …, Xi-1) to the next hidden unit in the hidden layer, thus solving the sequential-information problem.

- Since the weight matrices are shared across timestamps, an RNN can also take variable-size inputs during inference, thus solving the variable-size input problem too.
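Both points can be seen in a tiny scalar sketch. The weights and function name below are made up for illustration. The same three numbers are reused for sequences of any length, and changing only the first input still changes the final hidden state.

```python
import math

def final_state(xs, u=0.7, w=0.9, b=0.0):
    """Scalar RNN: the same u, w, b are reused at every step, whatever len(xs) is."""
    h = 0.0
    for x in xs:
        h = math.tanh(u * x + w * h + b)
    return h

# Variable-size inputs: no parameter depends on the sequence length.
short = final_state([1.0, -1.0])
longer = final_state([1.0, -1.0, 0.5, 0.5, -2.0, 1.0])

# Same tail, different first input: the final state still differs,
# because earlier inputs reach it through the chain of hidden states.
state_pos = final_state([+1.0, 0.2, 0.2])
state_neg = final_state([-1.0, 0.2, 0.2])
print(short, longer, state_pos, state_neg)
```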

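Issues with RNNs:

RNNs have two problems, namely vanishing gradients and exploding gradients. To understand these, refer to the dE / dW equation. That gradient contains a product with one factor per timestamp, so over long sequences it can shrink towards zero or grow without bound.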
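The effect of that repeated product is easy to see in a simplified setting. The sketch below assumes a scalar linear recurrence h_t = w * h_{t-1} (no activation, a simplification made here for illustration), so the gradient of h_T with respect to h_0 is just w multiplied by itself T times. With tanh, each factor is additionally scaled by an activation derivative of at most 1, which pushes further towards vanishing.

```python
def gradient_scale(w, steps):
    """|dh_T/dh_0| for a scalar linear recurrence h_t = w * h_{t-1}."""
    scale = 1.0
    for _ in range(steps):
        scale *= abs(w)  # one factor of w per timestamp
    return scale

for w in (0.5, 1.0, 1.5):
    print(w, [gradient_scale(w, T) for T in (1, 10, 50)])
```

A weight below 1 in magnitude drives the factor towards zero (vanishing), while a weight above 1 makes it blow up (exploding). In the matrix case the same role is played, roughly, by the size of the largest singular values of W.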

Ideas to solve RNN Issues

These issues can be addressed in the following ways:

- Vanishing gradients: this can be mitigated by introducing skip connections / direct connections (specifically highway connections) into the network.

- Exploding gradients: this can be handled using the gradient clipping technique.
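Gradient clipping comes in several variants. The sketch below shows one common form, clipping by global L2 norm, in which all gradients are rescaled together when their combined norm exceeds a threshold. The function name, the threshold, and the gradient values are made up for illustration.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together if their combined L2 norm exceeds max_norm."""
    total = math.sqrt(sum(g * g for values in grads.values() for g in values))
    if total <= max_norm:
        return grads, total
    factor = max_norm / total
    clipped = {name: [g * factor for g in values] for name, values in grads.items()}
    return clipped, total

# Flattened gradients (made-up numbers) for two parameter groups.
grads = {"W": [30.0, -40.0], "U": [0.0, 0.0]}
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
print(norm_before, clipped)
```

Rescaling keeps the direction of the update and only limits its length, so a single very large gradient cannot throw the parameters far away in one step.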

Stacked Layer Architecture