Graph Representation Learning using Graph Transformers !!

Graph Transformers

There are many learning tasks we could define to learn mature node representations for the type of graph shown above; here we will work in the supervised learning setting!! We will look at the graph-level regression task, but you can use a similar architecture for graph-level classification too!!

Method 1 : Global Pooling Method

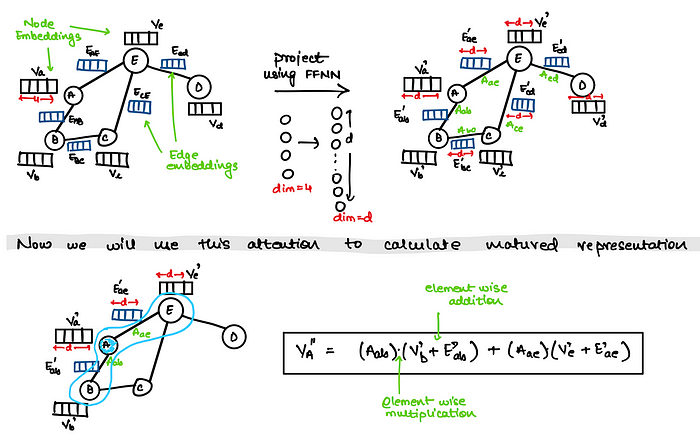

Step 1 : Generating Mature Node Embeddings using Graph Transformers

This is very similar to the Graph Attention Network (GAT) that we saw; the only difference is that attention is calculated slightly differently, since we also need to incorporate the edge information!!
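To make "attention that also uses edge information" concrete, here is one common formulation, written as a sketch. It is the form used by PyTorch Geometric's `TransformerConv` layer and may differ in detail from the paper this article follows. The symbols are assumptions introduced here: $h_i$ is the embedding of node $i$, $e_{ij}$ the feature vector of edge $(i, j)$, $d$ the per-head dimension, and the $W$ matrices are learned weights.

```latex
\alpha_{ij} = \operatorname{softmax}_{j \in \mathcal{N}(i)}
  \left( \frac{(W_q h_i)^{\top} (W_k h_j + W_e e_{ij})}{\sqrt{d}} \right),
\qquad
h_i' = W_s h_i + \sum_{j \in \mathcal{N}(i)} \alpha_{ij} \, (W_v h_j + W_e e_{ij})
```

Compared with plain node-to-node attention, the only change is the extra $W_e e_{ij}$ term added to both the key and the value, which is how the edge features influence the attention score and the message.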

The attention computation above is shown for a single attention head only; multi-head attention works the same way, with several heads computed in parallel!!

Some follow-up papers have also tried adding positional encodings!!

Step 2 : Aggregation — Combining Mature Node Embeddings to get Graph Embeddings

The same aggregation methods that you saw in the non-learning method apply here.
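As a reminder of what this aggregation step does, here is a minimal sketch in plain Python. It assumes the aggregation methods in question are the usual sum, mean, and max readouts; the function name `pool_nodes` and the toy embeddings are made up for illustration.

```python
def pool_nodes(node_embeddings, how="mean"):
    """Collapse a list of node embedding vectors into one graph embedding."""
    # zip(*rows) groups the values of each embedding dimension together
    columns = list(zip(*node_embeddings))
    if how == "sum":
        return [sum(col) for col in columns]
    if how == "mean":
        return [sum(col) / len(col) for col in columns]
    if how == "max":
        return [max(col) for col in columns]
    raise ValueError(f"unknown pooling method: {how}")


# Toy example: a graph with two nodes and 2-dimensional mature embeddings
embeddings = [[1.0, 2.0], [3.0, 6.0]]
graph_sum = pool_nodes(embeddings, "sum")
graph_mean = pool_nodes(embeddings, "mean")
graph_max = pool_nodes(embeddings, "max")
```

Whatever the readout, the result has the same size as a single node embedding, so graphs with different numbers of nodes all map to a fixed-size graph embedding.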

Code Implementation (using the PyTorch Geometric library)

Method 2 : Dummy Node Method

This is the same as what we saw in the non-learning method using a GNN, except that here the GNN is replaced by the Graph Transformer.
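As a rough sketch of the dummy node idea: we append one extra node, connect it to every real node in both directions, run the message-passing layers, and read off the dummy node's embedding as the graph embedding. The helper below only does the graph augmentation, in plain Python. The function name `add_dummy_node` and the choice of all-zero features for the new node and its edges are assumptions made here for illustration.

```python
def add_dummy_node(node_feats, edges, edge_feats):
    """Return a copy of the graph with one dummy node linked to every real node."""
    num_nodes = len(node_feats)
    dummy = num_nodes                          # index of the new node
    node_dim = len(node_feats[0])
    edge_dim = len(edge_feats[0])

    # Assumption: the dummy node and its edges start with all-zero features
    new_node_feats = node_feats + [[0.0] * node_dim]
    new_edges = list(edges)
    new_edge_feats = list(edge_feats)
    for v in range(num_nodes):
        new_edges += [(v, dummy), (dummy, v)]  # connect in both directions
        new_edge_feats += [[0.0] * edge_dim, [0.0] * edge_dim]
    return dummy, new_node_feats, new_edges, new_edge_feats


# Toy graph: 3 nodes on a path, 2-dim node features, 1-dim edge features
node_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edges = [(0, 1), (1, 2)]
edge_feats = [[1.0], [2.0]]
dummy, aug_nodes, aug_edges, aug_edge_feats = add_dummy_node(node_feats, edges, edge_feats)
```

After the attention layers have run on the augmented graph, the row of the output at index `dummy` plays the role that the pooled vector plays in Method 1.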

Method 3 : Hierarchical Pooling Method

This is the same as what we saw in the non-learning method using a GNN, except that here the GNN is replaced by the Graph Transformer.